The Artificial Intelligence (AI) Act entered into force on 1 August 2024 and, following a phased implementation, becomes generally applicable two years later. Most obligations under the Act must therefore be met by 2 August 2026, with the exceptions noted throughout this article.

The Act applies to any organisation, irrespective of its location, that places AI systems on the EU market or whose AI systems are used in the EU. This includes providers and deployers of AI systems within the EU; providers and deployers located outside the EU, where the output of their systems is used within the EU; importers and distributors of AI systems; and product manufacturers that place AI systems on the EU market together with their products under their own name or trademark.

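Note that the Act also provides for exceptions, including, but not limited to: public authorities of non-EU countries and international organisations using AI systems within the framework of international law enforcement and judicial cooperation with the EU or its Member States; AI systems used exclusively for military, defence or national security purposes; and AI systems developed and put into service solely for scientific research and development.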

Risk Classification

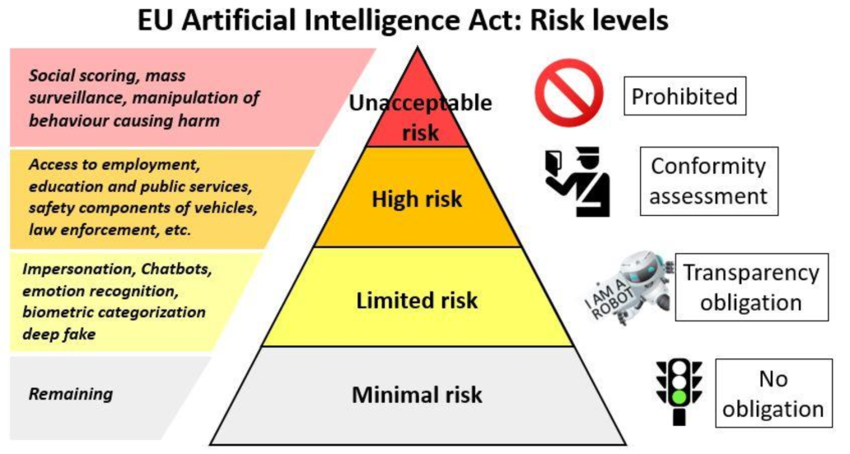

The AI Act implements a risk-based approach that emphasises the application of AI rather than the technology itself. AI systems are classified according to their applications and the level of risk they pose to users as well as society. This means that different AI applications are treated according to the potential threats they pose, with obligations increasing in accordance with this risk level.

AI applications, therefore, fall into one of four categories, as shown in the following diagram:

Source: ReseachGate

Unacceptable Risk

Applications in the first category, unacceptable risk, are prohibited outright because they pose a clear threat to people’s rights, safety and livelihoods. The ban applies from 2 February 2025, six months after the Act’s entry into force.

High-Risk

High-risk AI systems are those that pose a significant risk to health, safety or fundamental rights. They are therefore subject to strict requirements, including a conformity assessment before they are placed on the market, and obligations that continue to apply throughout their lifecycle.

An AI system may be classified as high-risk in two cases. The first is where the AI system itself, or the product of which it is a safety component, is covered by the EU legislation listed in Annex I of the AI Act and must undergo a third-party conformity assessment under that legislation. Annex I covers, among other things, civil aviation, marine equipment, medical devices, toys and machinery. The rules for this category apply 36 months after the Act’s entry into force, from 2 August 2027.

The second case arises where the AI system is listed in Annex III of the AI Act, which covers areas such as biometrics, critical infrastructure, and migration, asylum and border control management. The rules for these systems apply 24 months after the Act’s entry into force, from 2 August 2026.

Limited Risk

AI applications that pose limited risk are primarily subject to transparency requirements, which ensure that people are informed when they are interacting with AI or engaging with AI-generated content. AI-generated or manipulated image, audio or video content (deepfakes) must be clearly labelled as such, as must AI-generated text published to inform the public on matters of public interest. Similarly, chatbots must inform users that they are interacting with a machine, so that users can make an informed decision about whether to continue.

These transparency requirements also apply to high-risk AI systems, which must meet them in addition to their own specific regulatory obligations.

Minimal or No Risk

Minimal or no risk AI systems include AI-enabled video games, inventory management systems and spam filters. These systems carry no specific obligations under the AI Act, although operators are encouraged to adhere voluntarily to codes of conduct for ethical and trustworthy AI. (The 9-month deadline in the Act concerns codes of practice for general-purpose AI models, not these voluntary codes of conduct.)

General-Purpose AI Models

General-purpose AI (GPAI) models, such as foundation models that can perform a wide range of tasks and underpin generative AI systems, fall under a separate classification scheme. All GPAI model providers must meet transparency requirements, including drawing up technical documentation, publishing a summary of the content used for training and putting in place a policy to comply with EU copyright law. Models that pose systemic risk (presumed where the cumulative compute used for training exceeds 10^25 floating-point operations) are additionally subject to model evaluations, adversarial testing, systemic risk assessment and mitigation, serious incident reporting and cybersecurity measures. The rules for GPAI models apply 12 months after the Act’s entry into force, from 2 August 2025.
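The phased dates described so far can be collected into a simple lookup. The Python sketch below is illustrative only. `MILESTONES`, `phases_in_force` and `next_phase` are hypothetical names, and the labels are short summaries rather than the Act's wording. The dates are the application dates set out in Article 113 of the Act, each falling the day after the relevant 6-, 12-, 24- or 36-month mark from entry into force. The sketch does not capture every provision that applies on each date.

```python
from datetime import date
from typing import Optional

# Application dates of the phases discussed above (Article 113 of the AI Act).
# Labels are illustrative summaries, not the Act's wording.
MILESTONES: list[tuple[date, str]] = [
    (date(2025, 2, 2), "Prohibited (unacceptable-risk) practices banned"),
    (date(2025, 8, 2), "Obligations for general-purpose AI models apply"),
    (date(2026, 8, 2), "General application, incl. Annex III high-risk rules"),
    (date(2027, 8, 2), "High-risk rules for Annex I products apply"),
]


def phases_in_force(on: date) -> list[str]:
    """Labels of every phase whose application date is on or before `on`."""
    return [label for start, label in MILESTONES if start <= on]


def next_phase(on: date) -> Optional[tuple[date, str]]:
    """The earliest phase that has not yet started on `on`, or None if all apply."""
    upcoming = [(start, label) for start, label in MILESTONES if start > on]
    # Tuples compare by date first, so min() picks the earliest upcoming phase.
    return min(upcoming) if upcoming else None


if __name__ == "__main__":
    check = date(2025, 9, 1)
    print("In force:", phases_in_force(check))
    print("Next:", next_phase(check))
```

For example, on 1 September 2025 the prohibitions and the GPAI obligations apply, and the next phase is general application on 2 August 2026.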

Penalties

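The AI Act sets severe penalties for non-compliance. The penalty provisions apply 12 months after the Act’s entry into force, from 2 August 2025, except for fines on GPAI model providers, which apply from 2 August 2026. The maximum fines are as follows: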

Prohibited AI practices: Non-compliance with these prohibitions can result in fines of up to EUR 35 million or up to 7% of a company’s total worldwide annual turnover for the prior year, whichever is greater.

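High-risk AI systems and other obligations: Non-compliance with the requirements for high-risk AI systems, or with most other obligations under the Act (such as the transparency requirements), can lead to fines of up to EUR 15 million or up to 3% of a company’s total worldwide annual turnover for the prior year, whichever is greater.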

General-purpose AI models: Infringements of these obligations can result in fines of up to EUR 15 million or up to 3% of a company’s total worldwide annual turnover for the prior year, whichever is greater.

Supplying false information: Providing incorrect, incomplete or misleading information to notified bodies or national competent authorities can incur fines of up to EUR 7.5 million or up to 1% of a company’s total worldwide annual turnover for the prior year, whichever is greater.
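Each tier above follows the same arithmetic: the maximum fine is the greater of a fixed amount and a percentage of worldwide annual turnover for the prior year. The Python sketch below illustrates this under stated assumptions. `FineTier`, `TIERS` and `max_fine` are hypothetical names, the tier keys mirror the headings above, and the function returns only the statutory upper limit, not the fine an authority would actually impose. It also models one detail not covered above: for SMEs and start-ups, Article 99(6) caps fines at whichever amount is lower. The sketch assumes that rule applies only to the three Article 99 tiers and not to GPAI fines, which are governed by Article 101.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTier:
    fixed_cap_eur: int     # fixed ceiling in euros
    turnover_pct: int      # ceiling as a percentage of worldwide annual turnover
    sme_lower_rule: bool   # assumption: True for Article 99 tiers, False for GPAI (Article 101)


# Hypothetical tier keys mirroring the penalty headings above (upper limits only).
TIERS = {
    "prohibited_practice": FineTier(35_000_000, 7, True),
    "high_risk": FineTier(15_000_000, 3, True),  # also covers most other obligations
    "gpai": FineTier(15_000_000, 3, False),
    "false_information": FineTier(7_500_000, 1, True),
}


def max_fine(tier: str, annual_turnover_eur: int, sme: bool = False) -> float:
    """Return the statutory maximum fine in euros for one infringement tier."""
    t = TIERS[tier]
    turnover_cap = annual_turnover_eur * t.turnover_pct / 100
    if sme and t.sme_lower_rule:
        # SMEs and start-ups: whichever of the two amounts is LOWER.
        return float(min(t.fixed_cap_eur, turnover_cap))
    # Default rule: whichever of the two amounts is GREATER.
    return float(max(t.fixed_cap_eur, turnover_cap))


if __name__ == "__main__":
    print(max_fine("prohibited_practice", 1_000_000_000))         # large company
    print(max_fine("prohibited_practice", 100_000_000, sme=True))  # SME
```

For example, a company with a worldwide annual turnover of EUR 1 billion faces a cap of EUR 70 million for a prohibited practice, because 7% of its turnover exceeds EUR 35 million; for an SME with a turnover of EUR 100 million, the same cap falls to EUR 7 million.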

Conclusion

The AI Act imposes compliance obligations on all parties involved in the lifecycle of AI systems, from development to deployment and distribution. These include providers, deployers, importers, distributors, product manufacturers and authorised representatives (non-EU providers of high-risk AI systems and GPAI models must appoint an authorised representative established in the EU). Organisations both within and outside the EU are therefore responsible for meeting these obligations.

Disclaimer

This guide contains information for general guidance only and is not a substitute for professional advice, which must be sought before taking any action.